Extending the Concept of Agency to Artificial Intelligence

In philosophy, agency refers to the capacity to act with intention and independence. The German philosopher Hegel argued that agency is more than simply acting. It is also a process of becoming free by acting in relation to others and in accordance with shared moral values.

Today, a form of agency can also be observed in advanced systems known as agentic AI. These systems do more than automate tasks: they adapt to changing situations, make plans and pursue goals. Some even exhibit simple “preferences” of their own. Agentic AI lacks consciousness and moral intent. Even so, the philosopher of technology Yuk Hui explains that it can still mimic agency through its goal-driven behaviour.

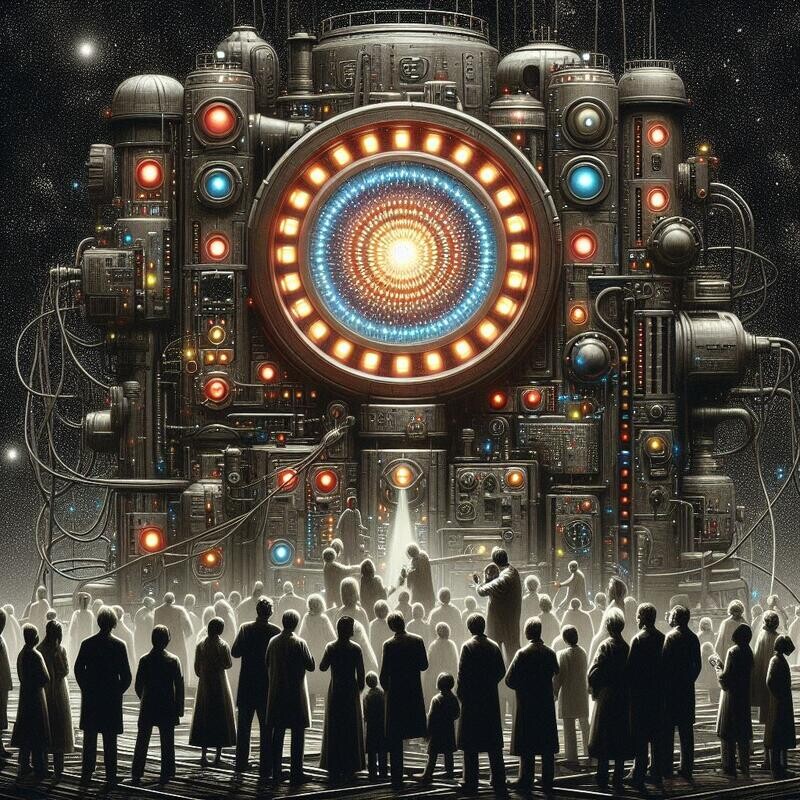

This brings us to AI agents, the practical expression of agentic AI. Autonomous vehicles and stock-market trading bots are both examples of AI agents. These systems often operate with little human input or oversight, which raises an important question: who is responsible for the harm an AI agent causes or the mistakes it makes?

We are facing a new reality. Machines are not becoming people, but they are becoming actors within systems that affect us. To respond, we need to rethink some key philosophical concepts. Agency is one of them: it is no longer a purely human trait but one that extends to technology.

There is a new kind of “actor” in the world. Are we ready to rethink philosophy to include it?